Part I: Navigating a rapidly transforming environment

by Nikos Mattheos, DDS, MASc, PhD

With Artificial Intelligence entering every aspect of healthcare, the already increasingly digitised field of Implant Dentistry has become a testing ground for new tools. But what is at the core of this “artificial intelligence” revolution, and how different is it really from what we have been doing so far? In this discussion we will dissect some fundamentals of what machine learning is and is not, and review some common misperceptions and pitfalls, before identifying what we need to know to thrive in the new era of practice ahead.

Let's start with a simple question...what is intelligence?

It’s something we all know, right? We can identify it by its manifestations; we can, for example, recognise an “intelligent” person. But what exactly is it that makes someone intelligent? Their ability to solve complex mathematical problems? Their skill at leading arguments in a debate? Perhaps writing a captivating novel? Inspiring people to unite in a common cause? Or simply the ability to survive in a hostile environment? And what about “genius”? Is this also an expression of intelligence? Was Mozart intelligent? What about Nikos Galis? All these are valid approaches to what intelligence might entail, but none of them can really capture it in its entirety.

For most of the past century, the notion of intelligence was dominated by the ability to perform very specific tasks in cognitive domains such as mathematics. In 1912, the German psychologist William Stern, at the University of Breslau, proposed the intelligence quotient in an attempt to define and measure human intelligence; tests of it have since been known as IQ tests. Such tests included various verbal and non-verbal cognitive tasks built on mathematical, geometrical or logical sequences and matrices. Although such tests could predict certain abilities, it was evident that they captured only a very narrow and often heavily biased spectrum of the expressions of “intelligence”. Many scientists have disputed the value of IQ as a measure of intelligence altogether, arguing that it oversimplifies abstract concepts into measurable entities. Personally, I see very little value in IQ tests, while there is a serious risk of bias or even abuse, as in eugenics or elite club memberships. My favourite quote here is attributed to Einstein: “if you judge a fish by its ability to climb a tree, it will spend its whole life thinking it is stupid”. Indeed, IQ tests assess the ability to climb a very specific tree, one that would be irrelevant to many of us in this world. Friendly advice: devise your own growth path and don’t waste your time on IQ tests!

Today, I see intelligence not as book learning, a narrow academic skill or test-taking smarts. Rather, it reflects a broader and deeper resourcefulness in overcoming obstacles in your environment: your ability to understand and make sense of events and conditions, plan reactions and strategies, create, inspire, solve problems, flourish as a person and help your community flourish too.

In addition, mainstream psychology today recognises several expressions of intelligence, including Emotional, Spatial, Bodily-Kinesthetic, Artistic, Linguistic, Interpersonal and Intrapersonal, Logical-Mathematical, Musical and more. Although every healthy human is inherently able to demonstrate all of these expressions of intelligence, performance clearly differs from person to person in each domain and in their combinations, giving everyone a different path to survival and fulfillment. Whether at an ordinary level or at the level of genius, people typically score low in one domain and high in another, leading to achievements as different as those of a Nobel Prize-winning physicist, a top athlete or a brilliant creative artist.

And now the million-dollar question: can we create a machine that possesses the qualities and combinations described above that constitute “intelligence”?

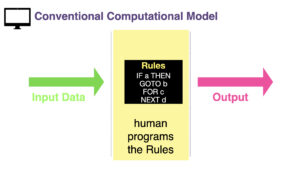

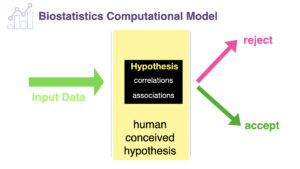

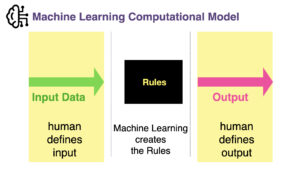

Maybe. That would be the Holy Grail of AI development, often described as “Artificial General Intelligence”, and we are not there yet (unless you are a fan of the Terminator, in which case you know that Skynet reached that level on August 29, 1997, at 02:14 a.m. EDT!). What we have already established, however, are algorithms or machines that can learn to perform tasks in many of the above domains of intelligence, often described as Machine Learning algorithms. “Learning”, itself an expression of intelligence, is at times a rather abstract notion when applied to humans. Studying to become a doctor, for example, implies that you learn to perform certain tasks and acquire certain competences. However, it also means that you will be prepared to deal with problems you were never trained for, or which you can hardly define; that you reflect on the outcomes of your decisions and comment on and criticise the decisions of others. It also means that you develop your own logic, treatment philosophy and biases, often very different from those others develop under the same training you had. Machine Learning, on the other hand, refers to very well-defined computational models, in which a machine is trained on a large amount of data through mathematical calculations to produce outputs in response to inputs. As a computation, it is the result of complex mathematical modelling, which is not unique to machine learning. It does, however, differ fundamentally from other complex computational models such as conventional computing or biostatistics. In conventional computing, for instance, a human writes the rules and the computer simply applies them to the input.

In machine learning, therefore (especially in its “supervised” form), the human provides no rules to the computational model. Instead, a large set of data is fed to the model, in which each input is paired with an output. The model converts inputs and outputs into numerical values and, through mathematical representations, seeks the equations that associate the two, thus creating from scratch the rules of how they are connected. In other words, we give the machine large amounts of “A”s and “B”s, “Causes” and “Effects”, “Befores” and “Afters”, and the machine creates the set of rules connecting them, which can then be used to make predictions in similar future cases.
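The idea of learning a rule from paired “A”s and “B”s can be sketched in a few lines. The example below is a minimal illustration, not how any particular clinical product works: it assumes the simplest possible model (a straight line, y = w·x + b) trained by gradient descent, and the function name `train_linear_model` and the toy data are hypothetical. The model is never told the rule that generated the data; it only adjusts its two numbers to reduce its error on the examples.

```python
# Minimal sketch of supervised learning (hypothetical toy example).
# The model is never told the rule y = 2x + 1; it only sees paired
# examples (inputs "A", outputs "B") and tunes w and b to reduce its error.

def train_linear_model(pairs, learning_rate=0.01, epochs=5000):
    """Fit y ≈ w * x + b to (x, y) pairs by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        # Take a small step in the direction that lowers the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Training data generated by a rule the model does not know: y = 2x + 1
examples = [(x, 2 * x + 1) for x in range(10)]
w, b = train_linear_model(examples)

print(f"learned rule: y = {w:.2f} * x + {b:.2f}")  # → learned rule: y = 2.00 * x + 1.00
print("prediction for x = 20:", round(w * 20 + b, 2))
```

Real machine learning models have far more adjustable numbers than two, but the principle of fitting them to input-output pairs, rather than writing the rule by hand, is the same.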

How well will an algorithm developed through machine learning perform its task in real life? That is something one can only find out by testing it in real life. Each algorithm comes with internal performance metrics, which you will often hear referred to as “mean absolute error loss”, “ROC curves”, “mean average precision” or “cross-entropy loss”, or expressed as sensitivity and specificity. These essentially tell you how successful the machine learning process was in identifying patterns that link the input and outcome conditions in the data it was developed on. But when everything is converted into numerical values, some equation can always be found that links these values. Whether this equation corresponds to a meaningful pattern, or would help us solve the problem we intended to solve, is not guaranteed. We see images; the algorithm sees a cloud of numerical values in a multidimensional space. The algorithm's main advantage in spotting relations is, at the same time, its main disadvantage. To find out whether it works, we have to test it on a real-life set of tasks.
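To make some of these metrics less abstract, here is a minimal sketch that computes sensitivity, specificity, binary cross-entropy and mean absolute error by hand on a small, made-up set of predictions. All numbers are hypothetical and the helper names are illustrative, not taken from any product or library. ROC curves and mean average precision are built from the same ingredients (sensitivity/specificity, or precision/recall, evaluated across many decision thresholds) and are left out for brevity.

```python
import math

def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = disease present)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn

def sensitivity(y_true, y_pred):
    """Share of truly positive cases that the model flags as positive."""
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn)

def specificity(y_true, y_pred):
    """Share of truly negative cases that the model correctly clears."""
    _, tn, fp, _ = confusion_counts(y_true, y_pred)
    return tn / (tn + fp)

def binary_cross_entropy(y_true, probs, eps=1e-12):
    """Average penalty for probabilities that are confidently wrong (lower is better)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)

def mean_absolute_error(measured, predicted):
    """Average size of the error for numeric predictions, in the data's own units."""
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / len(measured)

# Hypothetical internal test set of 8 cases (1 = disease present)
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
probs = [0.9, 0.8, 0.6, 0.3, 0.2, 0.1, 0.4, 0.7]  # model's predicted probabilities
y_pred = [1 if p >= 0.5 else 0 for p in probs]    # decision threshold of 0.5

print("sensitivity:", sensitivity(y_true, y_pred))                      # 0.75
print("specificity:", specificity(y_true, y_pred))                      # 0.75
print("cross-entropy:", round(binary_cross_entropy(y_true, probs), 3))  # 0.511

# Hypothetical numeric task, e.g. predicted vs measured values in mm
print("MAE:", round(mean_absolute_error([3.0, 4.5, 5.0], [3.5, 4.0, 5.0]), 3))  # 0.333
```

Every one of these numbers only measures agreement with the labels of the cases the model was evaluated on; none of them can reveal whether the model learned the intended pattern or an irrelevant one.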

Spot the hidden tank!

There is a widely circulated anecdote in AI communities about the US military developing a machine learning algorithm to identify camouflaged enemy tanks hidden in forests. Photos of US and enemy tanks, taken from different angles and views, were fed into the machine learning model. The developers were soon excited to confirm that the algorithm could distinguish between US and enemy tanks with very high precision; when the software was tested by the army, however, it failed. Looking back at the training process, they realised that the photos of US tanks had been taken in good conditions and ample sunlight, while the photos of enemy tanks were mainly spy-camera snapshots from the front lines, taken in winter or cloudy conditions. The algorithm had learned to distinguish between weather patterns and brightness in the photos, rather than between the actual types of tank... Regardless of the authenticity of the story, the problem it describes is very concerning and has been repeatedly observed in machine learning applications in healthcare as well.
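The tank story can be reproduced in miniature. The sketch below is hypothetical throughout: each “photo” is reduced to two made-up numeric features, `brightness` and `tank_shape`, and the learner is the simplest one possible, a decision stump that picks the single feature that best separates the training labels. In the training set, brightness happens to match the tank type perfectly, while the genuine tank signal is only 80% reliable, so the learner picks brightness and then drops to roughly chance level on field photos where lighting is unrelated to the tank type.

```python
import random

def make_photos(n, lighting_matches_label, rng):
    """Hypothetical 'photos' reduced to two numeric features.
    Label 1 = enemy tank, 0 = US tank."""
    photos = []
    for _ in range(n):
        label = rng.randint(0, 1)
        # Genuine but imperfect signal: the tank outline agrees with the label 80% of the time
        tank_shape = label if rng.random() < 0.8 else 1 - label
        if lighting_matches_label:
            # Training photos: enemy tanks always shot in dull light, US tanks in sunshine
            brightness = 0.2 if label == 1 else 0.9
        else:
            # Field photos: lighting is unrelated to the tank type
            brightness = rng.choice([0.2, 0.9])
        photos.append(({"brightness": brightness, "tank_shape": tank_shape}, label))
    return photos

def predict(rule, features):
    """A decision stump: compare one feature with 0.5; polarity -1 flips the answer."""
    feature, polarity = rule
    above = features[feature] >= 0.5
    return int(above) if polarity == 1 else int(not above)

def accuracy(rule, photos):
    return sum(predict(rule, x) == y for x, y in photos) / len(photos)

def train_stump(photos):
    """Pick whichever single feature and direction best fits the training labels."""
    candidates = [(f, pol) for f in photos[0][0] for pol in (1, -1)]
    return max(candidates, key=lambda rule: accuracy(rule, photos))

rng = random.Random(42)
training = make_photos(500, lighting_matches_label=True, rng=rng)
field_photos = make_photos(1000, lighting_matches_label=False, rng=rng)

rule = train_stump(training)
print("learned rule:", rule)                            # ('brightness', -1)
print("training accuracy:", accuracy(rule, training))   # 1.0
print("field accuracy:", accuracy(rule, field_photos))  # close to chance
print("tank-shape rule in the field:", accuracy(("tank_shape", 1), field_photos))
```

Nothing in the learner is "wrong": it faithfully found the strongest pattern in the data it was given. The same mechanism applies to the Covid-19 radiographs discussed below if “brightness” is replaced by patient position or a portable-device marker.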

Covid-19 and Machine Learning Algorithms

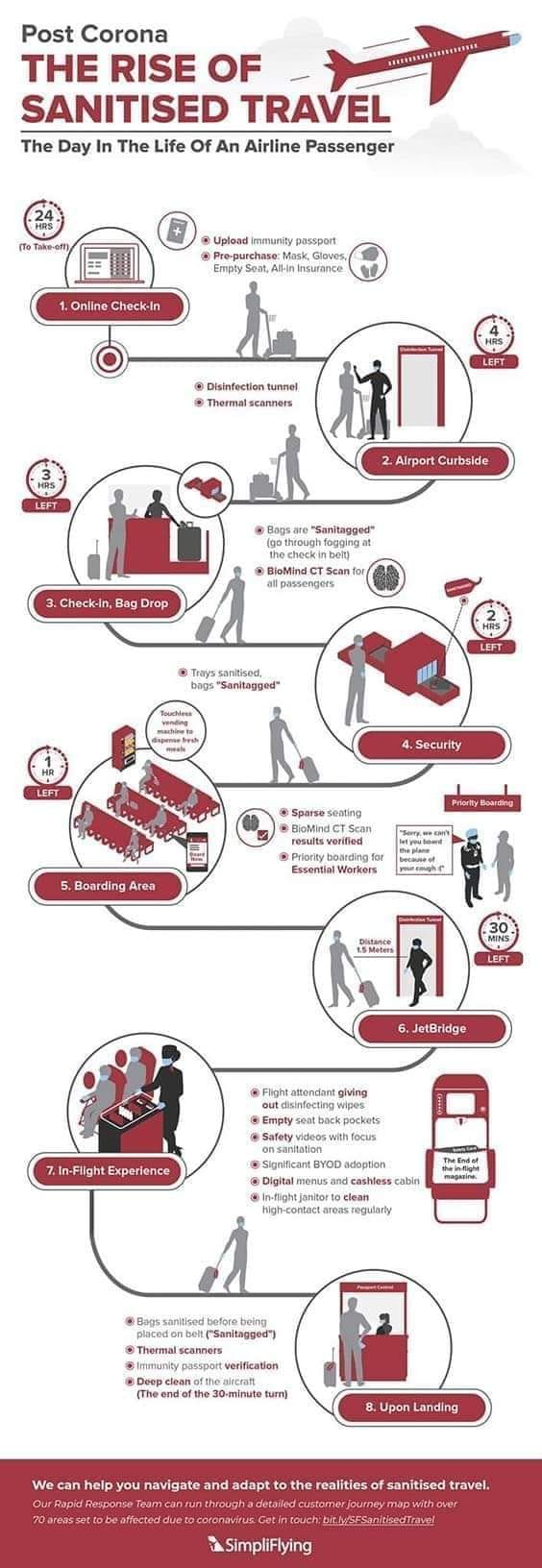

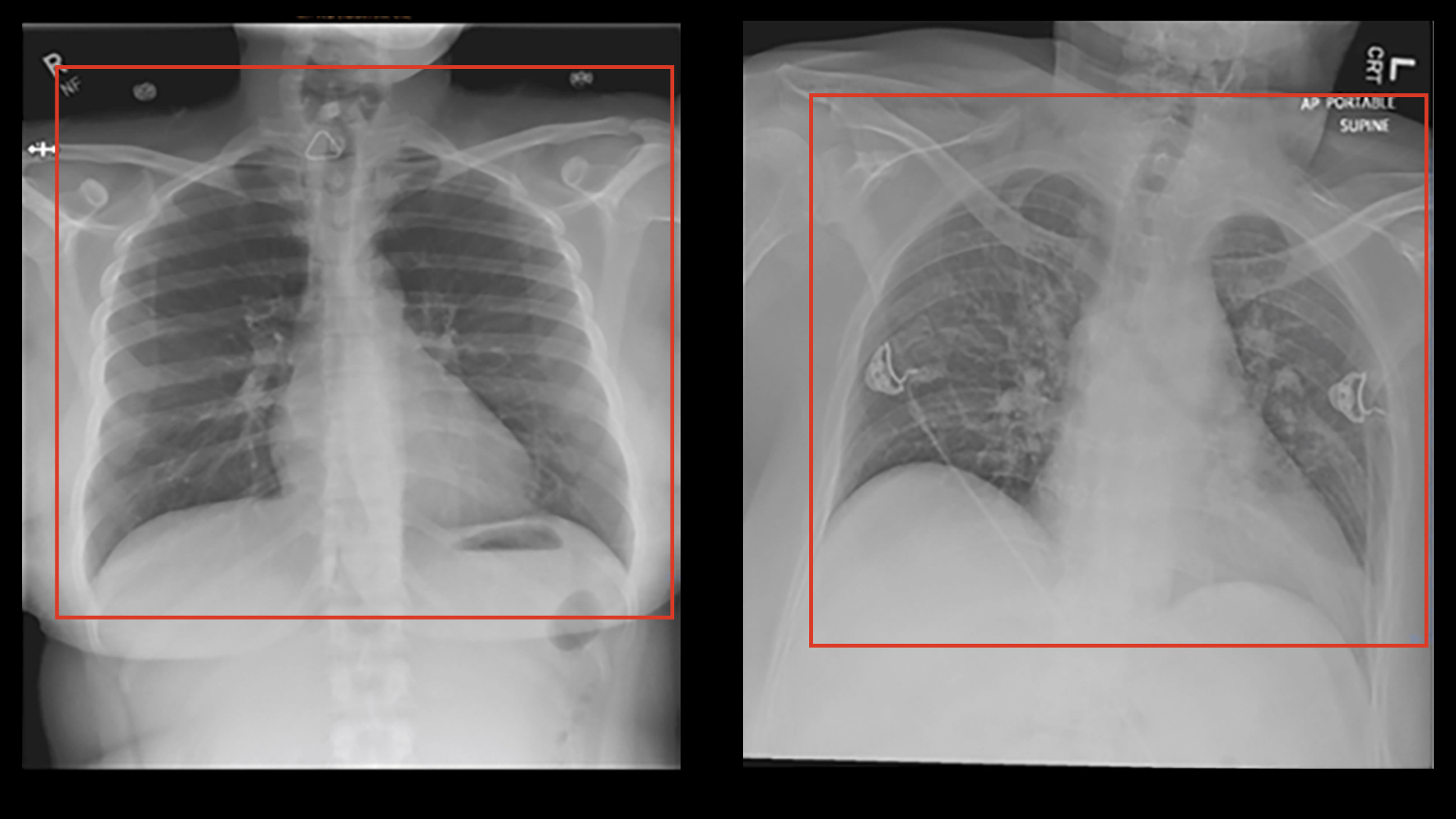

Back in May 2020, with Covid-19 on the rise everywhere and scarce resources to diagnose it, there was tremendous pressure for quick diagnostic or screening tests, which hastily developed AI applications sought to satisfy. Several machine learning algorithms were trained to recognise signs of Covid-19 in chest radiographs and CT scans, scoring high on internal metrics of performance. On this basis, some advocated the rapid introduction of mass-scale CT scanning of air travellers as a quick and efficient screening method. Luckily, the dystopian travel process described in the image was never implemented. Only later was it shown that none of these algorithms was fit for purpose, despite their promising internal precision. A review in Nature Machine Intelligence (Roberts et al. 2021) concluded that none of the models in the 62 studies reviewed was of potential clinical use, with most flaws traced to the process used to train the algorithms, which was plagued by deficiencies and bias. In one case, for example, the Covid-19-positive patients used to train the model were all bedridden, while the healthy controls were standing. The algorithm showed high internal precision in detecting Covid-19 from chest radiographs but failed in the actual clinical setting. It was then realised that the algorithm had learned to identify whether the patient was lying or standing, rather than their Covid-19 status.

Ironically, this could be one of AI’s most “human” features: “cutting corners”, that is, learning how to pass the exam without having really studied the content!

Remember the days of studying Biochemistry? Learning the content meant understanding thousands of pages loaded with biochemical reactions… But luckily, passing the exams only required studying some old exam questions and learning a few “SOS” chapters well! In the end we passed the exams, but how many of us could actually claim to understand Biochemistry? There is a price to pay for bad teaching (and bad exams), regardless of whether your students are humans or algorithms…!

Spot the ...x differences..!

Bedridden patients were frequently X-rayed with portable devices and under different settings. Their position was less symmetrical than that of the standing patients, with slight rotations and irregular placement in the frame. The radiographs were also sometimes marked with text indicating a portable device and supine position. In a very cool demonstration of street-smart intelligence, the machine learned how to pass the exam but never really learned the content...! This can be a typical case of what we call “overfit” algorithms, where the model has learned patterns that are too specific to the training sample, including irrelevant ones and noise, and thus becomes unable to detect the relevant patterns in new samples, even though it is highly successful on the training samples.
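The overfitting part of this description can also be illustrated in isolation with synthetic data (not radiographs). In this minimal, hypothetical sketch the true rule is “positive if x > 0.5”, but 20% of labels are flipped to mimic noisy annotations. A “memoriser” (a 1-nearest-neighbour model, which returns the label of the closest training case) reproduces every training label, noise included, while a simple rule accepts some training errors. The function names and data are assumptions made for illustration only.

```python
import random

def make_cases(n, rng, label_noise=0.2):
    """Hypothetical one-feature cases: the true rule is 'positive if x > 0.5',
    but a fraction of labels (label_noise) is flipped to mimic noisy annotations."""
    cases = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < label_noise:
            label = 1 - label  # annotation noise
        cases.append((x, label))
    return cases

def memoriser(train):
    """Overfit model (1-nearest neighbour): returns the label of the closest
    training case, so it reproduces every training label, noise included."""
    def predict(x):
        return min(train, key=lambda case: abs(case[0] - x))[1]
    return predict

def simple_rule(x):
    """The underlying pattern, accepting that some training labels disagree with it."""
    return int(x > 0.5)

def accuracy(predict, cases):
    return sum(predict(x) == y for x, y in cases) / len(cases)

rng = random.Random(7)
train = make_cases(200, rng)
new_cases = make_cases(2000, rng)
overfit = memoriser(train)

print("memoriser, training cases:", accuracy(overfit, train))  # 1.0
print("memoriser, new cases:", accuracy(overfit, new_cases))
print("simple rule, training cases:", accuracy(simple_rule, train))
print("simple rule, new cases:", accuracy(simple_rule, new_cases))
```

The memoriser looks perfect on the training cases yet does worse than the simple rule on new cases: exactly the pattern of high success on the training sample and weaker performance on new samples described above.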

There is one thing we cannot know about products that claim to include machine learning: what content was used to train which model, and how. Thus, until extensive clinical validation is available and can be presented and assessed, any such product has to be treated with extreme caution.

In conclusion, whether machine learning and artificial intelligence are synonyms is a long-running debate. I typically hear the term “machine learning” used by scientists, while the term “AI” is more frequent in lay discussions and marketing. Regardless of terminology, machine learning is here to stay, and it has the potential to truly transform the way we practise implant dentistry. From our side, however, I see two important and urgent prerequisites: 1) “AI literacy” among dentists and healthcare practitioners, which will empower them to assess products with AI claims, and 2) a clear system of “AI labelling”, which will offer the clinician concise and transparent information about the algorithm, how it was developed, and its potential and limitations.
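To make the idea of an “AI label” a little more concrete, here is a hypothetical sketch of the kind of information such a label could carry, written as a Python dataclass. No existing labelling standard is implied: the class name `AILabel`, every field and the example product are assumptions made for illustration. The one rule it encodes follows the argument above: internal metrics alone are not treated as evidence of clinical validity.

```python
from dataclasses import dataclass, field

@dataclass
class AILabel:
    """Hypothetical 'AI label' for a clinical software product.
    Every field name is an illustrative assumption, not an existing standard."""
    product_name: str
    intended_task: str      # what the algorithm is meant to do
    training_data: str      # source, size and population of the training cases
    internal_metrics: dict  # e.g. sensitivity/specificity on internal test data
    external_validation: list = field(default_factory=list)  # independent real-life studies
    known_limitations: list = field(default_factory=list)

    def is_clinically_validated(self) -> bool:
        """Internal metrics alone are not enough: require at least one
        independent real-life validation study."""
        return len(self.external_validation) > 0

# Hypothetical product with impressive internal metrics but no external validation
label = AILabel(
    product_name="Hypothetical implant-planning assistant",
    intended_task="Suggest implant position on CBCT scans",
    training_data="Undisclosed",
    internal_metrics={"sensitivity": 0.97, "specificity": 0.95},
    known_limitations=["Not tested on patients outside the training clinics"],
)
print(label.is_clinically_validated())  # False
```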

We are, yet again, just at the beginning of an exciting era!

References

- Roberts, M., Driggs, D., Thorpe, M. et al. Common pitfalls and recommendations for using machine learning to detect and prognosticate for COVID-19 using chest radiographs and CT scans. Nat Mach Intell 3, 199–217 (2021). https://doi.org/10.1038/s42256-021-00307-0
